I wanted to find out what people were saying on the Facebook pages of extreme political parties. At this stage I wasn’t so much bothered about what the party was saying as about what the comments on its posts were saying. The task was to find n-grams in a CSV file, and I decided to do it in R. The CSV was originally created from comments on 10 pages of BNP Facebook posts. I generated it quite quickly using an application called Facepager; it was very easy to do, and if you are interested you can find instructions on this post here.

The final script is here and is quite easy to follow:

options(mc.cores=1)

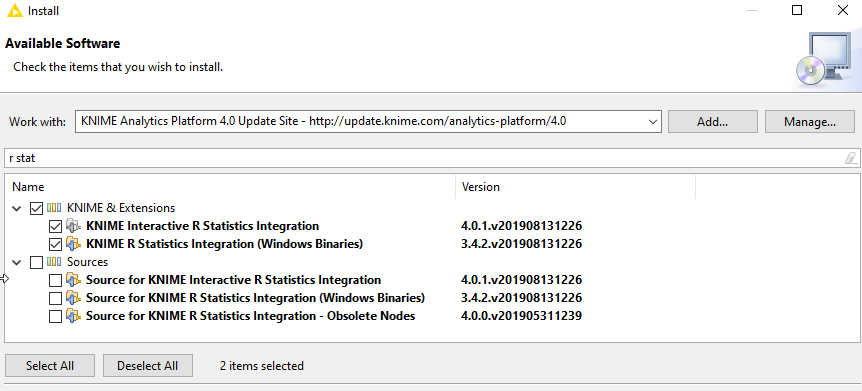

#import packages

install.packages("tm")

install.packages("RWeka")

install.packages("slam")

library(tm)

library("RWeka")

library("slam")

#import csv

mydata = read.csv("bnpwcomments.csv", sep = ";") # read csv file

#prepare text

corpus <- Corpus(VectorSource(mydata$message)) # create corpus object

corpus <- tm_map(corpus, removePunctuation, mc.cores=1)

corpus <- tm_map(corpus, removeNumbers, mc.cores=1)

corpus <- tm_map(corpus, removeWords, stopwords("english"), mc.cores=1)

# convert all text to lower case

corpus <- tm_map(corpus, tolower, mc.cores=1)

#problem with tolower means we need to make it a plain text document again

corpus <- tm_map(corpus, PlainTextDocument)

#make the term document matrix

tdm <- TermDocumentMatrix(corpus)

#find the frequent terms

findFreqTerms(tdm, lowfreq = 500)

#tokenizer for tdm with ngrams

BigramTokenizer <- function(x) NGramTokenizer(x, Weka_control(min = 4, max = 4))

tdm <- TermDocumentMatrix(corpus, control = list(tokenize = BigramTokenizer))

findFreqTerms(tdm, lowfreq = 15)

#create dataframe and order by most used

rollup <- rollup(tdm, 2, na.rm=TRUE, FUN = sum)

mydata.df <- as.data.frame(as.matrix(rollup))

colnames(mydata.df) <- c("count")

mydata.df$ngram <- rownames(mydata.df)

newdata <- mydata.df[order(mydata.df$count, decreasing=TRUE), ]
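
The last step of the script sums each phrase's count across all comments and then sorts the totals. Here is a minimal base R sketch of the same idea on a made-up matrix. The phrases and counts are invented for illustration, and `rowSums` stands in for `slam::rollup` because the toy matrix is dense rather than sparse.

```r
# Toy term-document matrix: rows are phrases, columns are comments.
# The values are invented for illustration only.
toy <- matrix(c(1, 0, 2,
                0, 1, 0,
                3, 1, 1),
              nrow = 3, byrow = TRUE,
              dimnames = list(c("phrase a", "phrase b", "phrase c"),
                              c("doc1", "doc2", "doc3")))

# Sum across documents: one total per phrase.
totals <- data.frame(count = rowSums(toy))
totals$ngram <- rownames(totals)

# Order by count, most frequent first.
totals <- totals[order(totals$count, decreasing = TRUE), ]
print(totals)
```

Referring to the column as `totals$count` inside `order()` matters: a bare `count` is not visible outside the data frame, so R would report that the object cannot be found.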

To change the number of words in the phrases you are looking for, find this line and set min and max to whatever length you want:

BigramTokenizer <- function(x) NGramTokenizer(x, Weka_control(min = 4, max = 4))
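
To see what that setting changes, here is a minimal base R sketch of word n-gram extraction. The `word_ngrams` helper is hypothetical and written only for illustration. It is not how RWeka's tokenizer is implemented, and it simply splits on whitespace.

```r
# Hypothetical helper (not part of tm or RWeka): returns every run of n
# consecutive words in a single string.
word_ngrams <- function(text, n) {
  words <- strsplit(tolower(text), "\\s+")[[1]]
  words <- words[nzchar(words)]  # drop empty tokens caused by leading spaces
  if (length(words) < n) return(character(0))
  starts <- seq_len(length(words) - n + 1)
  vapply(starts,
         function(i) paste(words[i:(i + n - 1)], collapse = " "),
         character(1))
}

sentence <- "at the end of the day"
word_ngrams(sentence, 3)  # four overlapping 3-word phrases
word_ngrams(sentence, 6)  # the whole sentence as one 6-word phrase
```

Because the windows overlap, one long repeated phrase also shows up as several shorter ones, which is why "the end of the" and "end of the day" both appear in the 4-word results.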

I haven’t finished playing with the data yet, but if you are interested, I have dug out the most popular phrases. It is quite obvious that, ‘at the end of the day’, to the BNP it’s a case of us vs them.

3 word phrases:

| Count | Phrase |
|---|---|
| 156 | in this country |
| 130 | all the way |
| 103 | a lot of |
| 99 | bnp all the |
| 89 | to do with |
| 87 | got my vote |
| 85 | in our country |
| 80 | in the uk |
| 78 | dont like it |
| 78 | if you dont |
| 75 | the bnp are |
| 75 | the rest of |
| 75 | the right to |
| 73 | we need to |
| 72 | the british people |

4 word phrases:

| Count | Phrase |
|---|---|
| 49 | in our own country |
| 47 | nothing to do with |
| 43 | if they dont like |
| 39 | if you dont like |
| 35 | the rest of the |
| 34 | the end of the |
| 32 | have the right to |
| 30 | if you want to |
| 30 | in the first place |
| 30 | they don’t like it |
| 29 | at the end of |
| 29 | end of the day |
| 28 | in the name of |
| 28 | this is our country |
| 26 | our way of life |
| 26 | send them all back |

5 word phrases:

| Count | Phrase |
|---|---|
| 26 | at the end of the |
| 26 | if they dont like it |
| 26 | the end of the day |
| 16 | has nothing to do with |
| 14 | if you dont like it |
| 12 | for the sole purpose of |
| 12 | sole purpose of child exploitation |
| 12 | the sole purpose of child |
| 11 | bring back the death penalty |

6 word phrases:

| Count | Phrase |
|---|---|
| 25 | at the end of the day |
| 12 | for the sole purpose of child |
| 12 | the sole purpose of child exploitation |
| 8 | any plans for you to review |
| 5 | for you to review cannabis laws |

Comments

Lorna M. Campbell · June 19, 2014 at 5:29 pm

Fascinating! It would be interesting to compare this data to other political parties to see if there is any noticeable difference.

David Sherlock · June 19, 2014 at 10:29 pm

I wondered about that; I think it’s a good idea. I wonder if we can use data mining to explore what sparks extremism.
